NetBackup 7.6 Features:

1. Sybase SQL Anywhere database (NetBackup catalog) has been upgraded to version 12.0.1

• Automatic tuning of server threads

• Column statistics management

• Improved indexing performance

• Faster validation of large databases

• Improved request prioritization

2. The new NetBackup status code 2111 has been added to this release with the following description:

All storage units are configured with On Demand Only and are not eligible for jobs requesting ANY storage unit

3. Support for 64-bit NDMP devices

4. NetBackup utility enhancements – NBCC, NBCCA, NBCCR, and nbsu utilities

5. Hot fix / EEB preinstall checker

6. Catalog enhancements

- Catalog backup performance

- Catalog compression enhancements

7. NetBackup Logging Assistant – to set up, collect, and upload debug logs and other information to Symantec Technical Support

NetBackup 7.6 Status Code Additions:

Cleanup of status code 156 – the newly added status codes are numbers 4200-4222

NetBackup 7.6 Command Additions:

1. bpplcatdrinfo – List, modify, or set disaster recovery policy

2. nbgetconfig – This command is the client version of bpgetconfig. It lets you view the local configuration on a client.

3. nboraadm – This command manages the Oracle instances that are used in Oracle backup policies.

4. nbrestorevm – This command restores VMware virtual machines

5. nbsetconfig – This command is the client version of bpsetconfig. It lets you edit the local configuration on a client

6. nbseccmd – runs the NetBackup Security Configuration service utility

7. configurePorts – This command is used to configure the Web ports for the Web Services Layer (WSL) application on the master server.

- bpclntcmd command logs messages to the /usr/openv/netbackup/logs/bpclntcmd directory

- Vault log file location – install_path\NetBackup\vault\sessions\vault_name\sidxxx\logs\

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

NetBackup 7.5 Features:

1. NetBackup Replication Director – offers unified, policy-based management of backups, snapshots, and replication (SnapVault, SnapMirror)

2. Virtualization – Support for vSphere 5, Granular recovery for Exchange and SharePoint virtual machines, New policy-type for VMware & Hyper-V

3. NetBackup Search – search across multiple domains; save, edit, and export search queries. This is a licensable feature.

4. DeDuplication – Integration of Auto Image Replication for media server deduplication

5. OS Compatibility Additions

6. OpsCenter Enhancements

7. Accelerator – offers intelligent, streamlined backups to disk

8. Cloud-based data protection – new cloud-based storage that features encryption

9. Telemetry – provides data collection and upload capabilities for NetBackup and OpsCenter installations.

NetBackup 7.5 Command Additions:

1. nbplupgrade – The nbplupgrade utility upgrades policies from the MS-Windows type to the new VMware or Hyper-V policy type.

2. nbfindfile – lets you search files or folders based on simple search criteria like file name and path.

3. W2Koption – runs the utility program that modifies normal backup and restore behavior.

4. nbdiscover – tests the query rules for automatic selection of VMware virtual machines for backup.

5. nbperfchk – measures the read and write speed of a disk array such as the disks that host deduplicated data

6. nbevingest – for ingesting file system data restored from NetBackup into Enterprise Vault for e-discovery of NetBackup data.

7. vnetd – command allows all socket communication to take place while connecting to a single port

8. nbstl -conflict – when specified, the changes to the SLP described by the other options on the command line are submitted for policy/SLP validation

9. nbstlutil -version; -jobid; -policy – nbrbutil command attribute additions.

10. nbholdutil – command runs the utility that places legal holds on backup images. Legal holds provide a mechanism to override existing retention levels.

NetBackup 7.5 Status Code Additions:

1. 1002

2. 1401 – 1426

3. 1450 – 1468

4. 2820

5. 5000 – 5034

- Removed 2,5,6 status codes

Media Server Deduplication Pool (MSDP)

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

HP UX:

/var/adm/syslog/syslog.log – System log

ioscan -fnC tape – Command to scan devices (-C takes a device class such as tape)

/var/spool/cron/crontabs – Cron Tab Location

insf -e – Command to add Device file

ps -eaf | grep "bpbkar" – Command to find a running process

kill -9 PID – To kill Process

/usr/contrib/bin/gunzip ./Managerhpux.tar.gz

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Other OS:

/var/adm/messages – Solaris

Solaris – sgscan, iostat -En

cfgadm -al | grep tape – Solaris tape device scan

HP-UX – ioscan, dmesg, getconf

AIX – ovpassdrivers

Linux – dmesg, lspci, hwinfo

Windows – Device Monitor

To scan tape devices on Linux and AIX servers:

lsdev – AIX Server

lspci – Linux Server

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

To Start Image Cleanup manually – bpimage -cleanup -allclients

To Start Catalog Image Consistency – bpdbm -consistency 2

To check due jobs for a specific time – nbpemreq -predict -date mm/dd/yyyy hh:mm:ss
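The date argument is easy to get wrong by hand. A minimal sketch that formats a timestamp in the mm/dd/yyyy hh:mm:ss layout noted above and builds the command line; the helper name is hypothetical and nothing here runs nbpemreq itself.

```python
from datetime import datetime

def nbpemreq_predict_command(when):
    """Return the nbpemreq command line for a prediction at `when`."""
    # mm/dd/yyyy hh:mm:ss, the layout given in the note above
    stamp = when.strftime("%m/%d/%Y %H:%M:%S")
    return "nbpemreq -predict -date " + stamp

print(nbpemreq_predict_command(datetime(2014, 3, 9, 18, 30, 0)))
# prints: nbpemreq -predict -date 03/09/2014 18:30:00
```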

nbproxy is the process that interacts with bpdbm

vnetd handles communication with other hosts

Unified Logging Logs – nbpem,nbjm,nbgenjob,nbsvcmon,PBX,nbrb,nbemm,bpbrm

Enabling Media Manager logs – add VERBOSE to /usr/openv/volmgr/vm.conf

Unified Logging Format – productID-originatorID-hostID-date-rotation.log (for example, 51216-111-3474696384-060710-0000000051.log)
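When sorting through a log directory it helps to split these names into their fields. A small sketch, assuming only the five-field layout shown above; the class and function names are made up for illustration, and the date field is kept as a raw string because its digit order is not stated here.

```python
from typing import NamedTuple

class UnifiedLogName(NamedTuple):
    product_id: str
    originator_id: str
    host_id: str
    date: str
    rotation: str

def parse_unified_log_name(filename):
    """Split productID-originatorID-hostID-date-rotation.log into fields."""
    stem, dot, ext = filename.rpartition(".")
    if dot != "." or ext != "log":
        raise ValueError("not a .log file name: %r" % filename)
    parts = stem.split("-")
    if len(parts) != 5:
        raise ValueError("expected 5 dash-separated fields: %r" % filename)
    return UnifiedLogName(*parts)

info = parse_unified_log_name("51216-111-3474696384-060710-0000000051.log")
print(info.originator_id, int(info.rotation))
# prints: 111 51
```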

NBDB – running on Sybase Adaptive Server Anywhere (ASA) 9.0.1

Ping the Sybase Server – nbdbms_start_server -stat (UNIX) and nbdb_ping.exe (Windows)

Sybase Server process – NB_dbsrv (UNIX) and dbsrv9 (Windows)

Check that master and media servers are members of an EMM domain – nbemmcmd -listhosts -verbose and nbemmcmd -getemmserver

Media and Device Selection (MDS)

bppolicynew policy_name -sameas template_policy – Command to copy policies

Displaying Allocations and Orphaned Resources – nbrbutil -dump, nbrbutil -listOrphanedMedia, nbrbutil -listOrphanedDrives, or nbrbutil -listOrphanedStus

Catalog Consistency – bpdbm -consistency

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

To Check Devices present:

Solaris – dmesg, sgscan, iostat -En

HP-UX – ioscan, dmesg, getconf

AIX – lsattr, lsdev

Linux – dmesg, lspci, hwinfo

Windows – Device Monitor

HP-UX – insf -e (creating device files)

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Command to change Policy STU

./bpplinfo "POLICYNAME" -modify -residence STUNAME

To check the drive status (Solaris) – mt command

To check the drive status (Windows) – install_path\Volmgr\bin\nt_ttu.exe

Tape Mount and Unmount – tpreq and tpunmount

Device Visibility – vmglob (WINDOWS) scan and sgscan (UNIX only)

Is the server SSO licensed? – nbemmcmd -listhosts -verbose (the Machine Flags value shows it: 1 = SSO; 2 = NDMP; 4 = Remote Client; 7 = All)
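Since 7 means "all", the flag values look like a bitmask (1 + 2 + 4). A short sketch that decodes a value on that assumption; the function name is hypothetical and only the three flags listed above are known here.

```python
# Flag values taken from the note above; treating them as bits is an assumption
# suggested by 7 = all (1 + 2 + 4).
MACHINE_FLAGS = {1: "SSO", 2: "NDMP", 4: "Remote Client"}

def decode_machine_flags(value):
    """Return the names of the flags set in `value`, lowest bit first."""
    return [name for bit, name in sorted(MACHINE_FLAGS.items()) if value & bit]

print(decode_machine_flags(7))  # prints: ['SSO', 'NDMP', 'Remote Client']
print(decode_machine_flags(2))  # prints: ['NDMP']
```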

Drive Tape alert codes – (CRT Means Critical)

0x14 – CRT Clean now

0x15 – CRT Clean Periodic

0x1E – CRT Hardware A

0x1F – CRT Hardware B
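For log scraping, the four codes above fit in a small lookup table. This is only a sketch of that table; the function name is made up, and any code not listed above is reported as unknown rather than guessed.

```python
# Only the four codes from the list above; CRT means critical.
TAPE_ALERTS = {
    0x14: ("CRT", "Clean now"),
    0x15: ("CRT", "Clean periodic"),
    0x1E: ("CRT", "Hardware A"),
    0x1F: ("CRT", "Hardware B"),
}

def describe_tape_alert(code):
    """Return a one-line description for a tape alert code."""
    entry = TAPE_ALERTS.get(code)
    if entry is None:
        return "0x%02X - not in this table" % code
    severity, text = entry
    return "0x%02X - %s %s" % (code, severity, text)

print(describe_tape_alert(0x1E))  # prints: 0x1E - CRT Hardware A
```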

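Device Mapping Files – external_types.txt and external_robotics.txt; the EMM database uses them to decide which protocols and settings to use with storage devices (see "How to update Device Configuration Files")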

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

NDMP Configuration:

useradmin user add <ndmpuser> -g "Backup Operators"

ndmpd password ndmpuser

set_ndmp_attr -insert -auth <filer_hostname> ndmpuser <password>

set_ndmp_attr -verify

set_ndmp_attr -probe

Starting with NetBackup 7.0, the NDMP commands are tpconfig and tpautoconf:

tpconfig – to add the NDMP host

tpautoconf – to verify and probe the NDMP host

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Making DSU up and down:

./nbdevconfig -changestate -stype NearStore -dp dstu_fs_x_06_1 -state UP

./nbdevconfig -changestate -stype NearStore -dp dstu_fs_x_06_1 -state DOWN

./nbdevquery -listdp -stype NearStore -dp dstu_fs_nt_21_2 -U

./bperror -disk – To Identify all DSU Errors

/usr/openv/netbackup/bin/bp.kill_all

/usr/openv/netbackup/bin/bp.start_all

/usr/openv/netbackup/bin/goodies/netbackup stop

/usr/openv/netbackup/bin/goodies/netbackup start

C:\Veritas\netbackup\bin\bpdown

C:\Veritas\netbackup\bin\bpup

./bpps -x

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Command to Make Drive down and up:

./vmoprcmd -h masterserver -up 18

./vmoprcmd -devmon -dp -h mediaserver

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Editing STU Settings:

./bpsturep -label VTL_C1 -cj 13 – Max Concurrent jobs

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

RobTest:

/usr/openv/volmgr/bin/robtest

init – initialize element status

debug – debug mode

test_ready – send a test unit ready signal

s d – status of drives

s p – status of the CAP (media access port)

s s – status of slots

m <from> <to> – move media from one element to another

mode – display library information

allow/prevent – allow/prevent media removal

inquiry – display vendor information

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

About Media:

NetBackup freezes media automatically when read or write errors surpass the threshold within the time window. The default media error threshold is 2. That is, NetBackup freezes media on the third media error in the default time window (12 hours).

NetBackup also freezes a volume if a write failure makes future attempts at positioning the tape unreliable.

Common reasons for write failures are dirty write heads or old media. The reason for the action is logged in the NetBackup error catalog (view the Media Logs report or the All Log Entries report).

You can use the NetBackup nbemmcmd command with the -media_error_threshold and -time_window options to change the default values.
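The freeze rule above can be sketched as a sliding-window count. This is a simplified model, not NetBackup's implementation: the function name is hypothetical, and treating errors exactly 12 hours apart as inside the same window is an assumption.

```python
from datetime import datetime, timedelta

def would_freeze(error_times, threshold=2, window_hours=12):
    """True if any window of `window_hours` holds more than `threshold` errors."""
    times = sorted(error_times)
    window = timedelta(hours=window_hours)
    start = 0
    for end, current in enumerate(times):
        # Drop errors that fall outside the window ending at `current`.
        while current - times[start] > window:
            start += 1
        if end - start + 1 > threshold:
            return True
    return False

t0 = datetime(2014, 1, 1, 8, 0)
print(would_freeze([t0, t0 + timedelta(hours=1), t0 + timedelta(hours=2)]))  # prints: True
print(would_freeze([t0, t0 + timedelta(hours=1)]))                           # prints: False
```

With the defaults, two errors never freeze the media and the third error inside the window does, which matches the description above.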

A single command that will return a list of catalog tapes is this:

- /usr/openv/volmgr/bin/vmquery -a -w | awk '$28==1 {print $1}'

However, for detailed verification, you could run the following command to generate a list of all tapes:

- /usr/openv/volmgr/bin/vmquery -a > /tmp/vmquery.out

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Start netbackup service on unix/linux:

/etc/init.d/xinetd restart – Linux

/etc/init.d/netbackup start/stop

/usr/openv/netbackup/bin/goodies/netbackup stop/start

/usr/openv/netbackup/bin/goodies/nbclient stop/start

xinetd netbackup stop / start

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

To Check MDS:

/usr/openv/netbackup/bin/admincmd

nbrbutil -dump

-releaseMDS

-listOrphanedMedia

-listOrphanedDrives

-releaseOrphanedMedia [name]

-releaseOrphanedDrive [name]

-releaseDrive

-releaseMedia

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Managing client entries on master:

./bpclient -client CLIENTNAME

-add

-update

-delete

-max_jobs

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

NetBackup Ports:

vnetd – 13724

pbx – 1556

bpcd – 13782 (Master to Client)

bprd – 13720 (Client to Master)

vxat – 2821

vxaz – 4032

bpdbm – 13721

bpjava – 13722

bpjobd – 13723

NDMP – 10000

vmd – 13701
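The port list above can be turned into a quick reachability check when telnet is not installed. A minimal sketch using only the standard library; the function names are hypothetical, and a successful TCP connect only shows the port is reachable, not that the daemon behind it is healthy.

```python
import socket

# Port numbers copied from the list above.
NETBACKUP_PORTS = {
    "vnetd": 13724, "pbx": 1556, "bpcd": 13782, "bprd": 13720,
    "vxat": 2821, "vxaz": 4032, "bpdbm": 13721, "bpjava": 13722,
    "bpjobd": 13723, "ndmp": 10000, "vmd": 13701,
}

def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def check_services(host, services):
    """Map each named service to True/False for the given host."""
    return {name: port_is_open(host, NETBACKUP_PORTS[name]) for name in services}

# Example (host name is a placeholder):
#   check_services("CLIENTNAME", ["bpcd", "vnetd", "pbx"])
```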

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Command to Force Media Server Restore:

FORCE_RESTORE_MEDIA_SERVER in bp.conf and ./bprdreq -rereadconfig

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Connectivity Commands:

telnet CLIENTNAME 13782

telnet MASTERNAME 13720

bptestbpcd -verbose -client <client-name>

bpclntcmd -pn

bpcoverage -c clientserver

The REQUIRED_INTERFACE key needs to be added to send traffic over the backup LAN

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

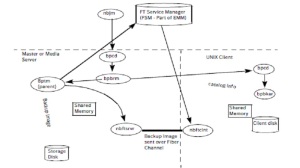

Check NetBackup Backup and Restore flow

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Catalog Backup:

\NetBackupDB

    \Conf

        Server.conf

        databases.conf

    \data

        NBDB.db

        EMM_DATA.db

        EMM_INDEX.db

        NBDB.log

        vxdbms.conf

        BMRDB.db

        BMRDB.log

        BMR_DATA.db

        BMR_INDEX.db

\NetBackup\DB

    \class

    \class_template

    \config

    \error

    \failure_history

    \images

        \Client_1

        \Client_N

        \Master

        \Media_Server

    \jobs

    \media

    \script

\NetBackup\var

\NetBackup\Vault

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Catalog Commands:

bpcatarc – Processes the output of bpcatlist, backs up those images, and writes the backup ID to each image file.

bpcatlist – Lists the catalog images.

bpcatrm – Processes the output of bpcatlist or bpcatarc and deletes the images that have a proper image/backup ID.

bpcatres – Processes the output of bpcatlist to restore the selected catalog image .f files.

bpbackupdb – backs up only Image Catalog

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Catalog Recovery Jobs:

Activity Monitor displays multiple jobs

• One Catalog Recovery job

• Multiple (3) Restore jobs

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

DR Mail Contents:

- Server, date, policy, catalog backup status, primary catalog media, and the procedure to recover with or without the DR file

With DR File:

1. Install NetBackup.

2. Configure the devices necessary to read the media listed above.

3. Inventory the media.

4. Make sure that the master server can access the attached DR image file.

5. bprecover -wizard or bprecover -r ALL

Without DR File:

1. Install NetBackup.

2. Configure the devices necessary to read the media listed above.

3. Inventory the media.

4. Run:

bpimport -create_db_info [-server name] -id BB0471

5. Go to the following directory to find the DR image file

GS_lfbkp01_catalog_1320120140_FULL:

/usr/openv/netbackup/db/images/lfbkp01/1320000000/tmp

6. Delete the other files in the directory.

7. Open GS_lfbkp01_catalog_1320120140_FULL file and find the BACKUP_ID (for example: lfbkp01_1320120140).

8. Run:

bpimport [-server name] -backupid lfbkp01_1320120140

9. Run:

bprestore -T -w [-L progress_log] -C lfbkp01 -t 35 -p GS_lfbkp01_catalog -X -s 1320120140 -e 1320120140 /

10. Run the BAR user interface to restore the remaining image database if the DR image is a result of an incremental backup.

11. To recover the NetBackup relational database, run:

bprecover -r -nbdb

12. Stop and Start NetBackup

13. Configure the devices if any device has changed since the last backup.

14. To make sure the volume information is updated, inventory the media to update the NetBackup database.
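The backup ID in step 7 is the client name plus a UNIX timestamp, which is also what the -s and -e options in step 9 carry. A small sketch that splits the ID and converts the timestamp; the function names are hypothetical, and the directory rule (timestamp rounded down to a multiple of 10,000,000) is inferred from the single example path in step 5, so treat it as an assumption.

```python
from datetime import datetime, timezone

def split_backup_id(backup_id):
    """Split 'client_ctime' into (client, ctime as int)."""
    client, _, ctime = backup_id.rpartition("_")
    if not client or not ctime.isdigit():
        raise ValueError("unexpected backup ID: %r" % backup_id)
    return client, int(ctime)

def image_directory_bucket(ctime):
    """Round ctime down to a multiple of 10,000,000 (inferred from the example)."""
    return ctime // 10_000_000 * 10_000_000

client, ctime = split_backup_id("lfbkp01_1320120140")
print(client, datetime.fromtimestamp(ctime, tz=timezone.utc).isoformat())
# prints: lfbkp01 2011-11-01T04:02:20+00:00
print(image_directory_bucket(ctime))
# prints: 1320000000
```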

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

VMWare VCB Backups:

1. Click Media and Device Management > Credentials > Virtual Machine Servers.

2. Create FlashBackup-WINDOWS Policy

3. Configuration parameters:

Client Name Selection – VM Hostname, VM Displayname, VM UUID

VM backup type – File Level Snapshot, Full VM, Mapped Full, Full Backup with file level incremental

Transfer types – san, nbd, nbdssl, try san then nbd, try san then nbdssl

Virtual machine quiesce – Enabled, Disabled

Monolithic export (VCB only) – 2 GB chunks

Snapshot mount point (VCB only) – D:\mnt

Troubleshooting:

bpfis – snapshot creation and backup

bpvmutil – Policy configuration and restore

bpvmreq – Restore

VM File is located under NetBackup\online_ftl\

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

VTL Details

Model used – VTL1400 and VTL700

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Zone Concept Includes:

Zones – Contain a set of member devices

Default Zone – Contains the devices that are not part of any zone

Zone Sets – Groups of zones that can be activated and deactivated

Active Zone Set – The zone set that is currently active

1. Zone Creation: Zone and Zone Members

2. Add/Remove members from Zone

3. Save the Zone to Zone Set

4. Activate it

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Media Double Import:

Phase 1 of the import is relatively quick, as NetBackup reads only the tape headers off the media. (bpimport -create_db_info -id <mediaid> -server <master_server_hostname> -L <progress_log>)

In Phase 2, however, NetBackup recreates the file information in the images database. (bpimport -Bidfile <filename_with_image_ids> -L <progress_log>)

- Phase 2 may take some time to complete, depending on the number and size of images being imported. Each imported image will likely take as long as, if not longer than, the original backup.

- Once the Phase 2 import is complete, the image has the same retention level as the original backup and a new expiration date calculated as the import date plus the retention period defined currently in Host Properties for the original backup’s retention level. At this point, the image is restorable like the original backup.
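The new expiration date is simple arithmetic: import date plus the period currently configured for the image's retention level. A sketch of that calculation; the retention table below holds example values only (the real periods come from Host Properties), and the function name is hypothetical.

```python
from datetime import datetime, timedelta

# Example values only; the actual periods are whatever Host Properties
# currently defines for each retention level.
EXAMPLE_RETENTION_DAYS = {0: 7, 1: 14, 2: 21}

def expiration_after_import(import_time, retention_level, retention_days=EXAMPLE_RETENTION_DAYS):
    """Import date plus the retention period configured for the level."""
    return import_time + timedelta(days=retention_days[retention_level])

print(expiration_after_import(datetime(2014, 1, 1, 9, 0), 1))
# prints: 2014-01-15 09:00:00
```

Note that the original backup date plays no part: an old image imported today gets a full retention period starting today.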

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Frozen and Suspended Media: Both are unavailable for backups but remain available for restores

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Default Volume Pools:

NetBackup

DataStore

None

CatalogBackup

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Moving EMM DB:

1. Stop NetBackup

2. Start only the ASA server service

3. Add the necessary environment variables – /usr/openv/db/vxdbms_env.sh

4. Start the NetBackup Relational Database Administration Tool – /usr/openv/db/bin/dbadm, give the password "nbsql", and select option 5 (Move Database)

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

NBU Upgrading sequence when EMM is on different server:

1. EMM Server

2. Master Server

3. Media Server

4. Client servers

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Upgrading NetBackup from 5.x to 6.x:

1. Do a full backup of the master and media servers

2. Deactivate policies and terminate the request daemon (bprdreq -terminate)

3. Make sure no backups are active

4. Perform a full catalog backup

5. For clustered systems, take the NetBackup resource offline and freeze the NBU group

6. Install the new NBU software

7. Unfreeze the active node

8. Check nbdb_ping and run create_nbdb if the DB is not created

9. Install MPs (maintenance packs)

10. Suspend resource allocation with nbrbutil -suspend

11. nbpushdata – push information from the current database files to the EMM database

12. Upgrade the media servers and client servers

13. Reactivate policies and test the backup

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Updating UNIX Client:

cd /usr/openv/netbackup/bin

./update_dbclients Oracle -ClientList /tmp/clientlist

./update_clients -ForceInstall -ClientList /tmp/cfile

With NetBackup 7.0, the agents come with the client binaries, so there is no need to update them separately.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

NDMP Types:

Local NDMP – Robot Attached to NDMP Host

Remote NDMP – Robot Attached to Media Server

3-Way NDMP – Robot attached to another NDMP Host

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Non-Direct Access Recovery (non-DAR) – NDMP

A non-Direct Access Recovery (non-DAR) restore may take a long time. By default, Veritas NetBackup™ waits 8 hours for NDMP operations to complete before timing out.

This timeout value can be modified by creating the NDMP_PROGRESS_TIMEOUT file in /usr/openv/netbackup/db/config/

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

bp.conf file Entries:

CLIENT_READ_TIMEOUT

BPBACKUP_POLICY

BPBACKUP_SCHED

BPARCHIVE_POLICY

BPARCHIVE_SCHED

CLIENT_CONNECT_TIMEOUT

Client bp.conf entries:

The following entries can be entered into the bp.conf file on clients:

AUTHENTICATION_DOMAIN

See AUTHENTICATION_DOMAIN bp.conf entry for UNIX servers and clients.

ALLOW_NON_RESERVED_PORTS

See ALLOW_NON_RESERVED_PORTS bp.conf entry for UNIX servers and clients.

BPARCHIVE_POLICY

See BPARCHIVE_POLICY bp.conf entry for UNIX clients.

BPARCHIVE_SCHED

See BPARCHIVE_SCHED bp.conf entry for UNIX clients.

BPBACKUP_POLICY

See BPBACKUP_POLICY bp.conf entry for UNIX clients.

BPBACKUP_SCHED

See BPBACKUP_SCHED bp.conf entry for UNIX clients.

BUSY_FILE_ACTION

See BUSY_FILE_ACTION bp.conf entry for UNIX clients.

BUSY_FILE_DIRECTORY

See BUSY_FILE_DIRECTORY bp.conf entry for UNIX clients.

BUSY_FILE_NOTIFY_USER

See BUSY_FILE_NOTIFY_USER bp.conf entry for UNIX clients.

BUSY_FILE_PROCESSING

See BUSY_FILE_PROCESSING bp.conf entry for UNIX clients.

CLIENT_NAME

See CLIENT_NAME bp.conf entry.

CLIENT_PORT_WINDOW

See CLIENT_PORT_WINDOW bp.conf entry for UNIX servers and clients.

CLIENT_RESERVED_PORT_WINDOW

See CLIENT_RESERVED_PORT_WINDOW bp.conf entry for UNIX servers and clients.

COMPRESS_SUFFIX

See COMPRESS_SUFFIX bp.conf entry for UNIX clients.

CRYPT_CIPHER

See CRYPT_CIPHER bp.conf entry for UNIX clients.

CRYPT_KIND

See CRYPT_KIND bp.conf entry for UNIX clients.

CRYPT_OPTION

See CRYPT_OPTION bp.conf entry for UNIX clients.

CRYPT_STRENGTH

See CRYPT_STRENGTH bp.conf entry for UNIX clients.

CRYPT_LIBPATH

See CRYPT_LIBPATH bp.conf entry for UNIX clients.

CRYPT_KEYFILE

See CRYPT_KEYFILE bp.conf entry for UNIX clients.

DISALLOW_SERVER_FILE_WRITES

See DISALLOW_SERVER_FILE_WRITES bp.conf entry for UNIX clients.

DO_NOT_RESET_FILE_ACCESS_TIME

See DO_NOT_RESET_FILE_ACCESS_TIME bp.conf entry for UNIX clients.

GENERATE_ENGLISH_LOGS

See GENERATE_ENGLISH_LOGS bp.conf entry for UNIX servers and clients.

IGNORE_XATTR

See IGNORE_XATTR bp.conf entry for UNIX clients.

INFORMIX_HOME

See INFORMIX_HOME bp.conf entry for UNIX clients.

INITIAL_BROWSE_SEARCH_LIMIT

See INITIAL_BROWSE_SEARCH_LIMIT bp.conf entry for UNIX servers and clients.

KEEP_DATABASE_COMM_FILE

See KEEP_DATABASE_COMM_FILE bp.conf entry for UNIX clients.

KEEP_LOGS_DAYS

See KEEP_LOGS_DAYS bp.conf entry for UNIX clients.

LIST_FILES_TIMEOUT

See LIST_FILES_TIMEOUT bp.conf entry for UNIX clients.

LOCKED_FILE_ACTION

See LOCKED_FILE_ACTION bp.conf entry for UNIX clients.

MEDIA_SERVER

See MEDIA_SERVER bp.conf entry for UNIX clients.

MEGABYTES_OF_MEMORY

See MEGABYTES_OF_MEMORY bp.conf entry for UNIX clients.

NFS_ACCESS_TIMEOUT

See NFS_ACCESS_TIMEOUT bp.conf entry for UNIX clients.

RANDOM_PORTS

See RANDOM_PORTS bp.conf entry for UNIX servers and clients.

RESTORE_RETRIES

See RESTORE_RETRIES bp.conf entry for UNIX clients.

SERVER_PORT_WINDOW

See SERVER_PORT_WINDOW bp.conf entry for UNIX servers and clients.

SERVER

See SERVER bp.conf entry for UNIX clients.

SYBASE_HOME

See SYBASE_HOME bp.conf entry for UNIX clients.

USE_CTIME_FOR_INCREMENTALS

See USE_CTIME_FOR_INCREMENTALS bp.conf entry for UNIX clients.

USE_FILE_CHG_LOG

See USE_FILE_CHG_LOG bp.conf entry for UNIX clients.

USE_VXSS

See USE_VXSS bp.conf entry for UNIX servers and clients.

USEMAIL

See USEMAIL bp.conf entry for UNIX clients.

VERBOSE

See VERBOSE bp.conf entry for UNIX servers and clients.

VXSS_NETWORK

See VXSS_NETWORK bp.conf entry for UNIX clients.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Network Buffer Size:

/usr/openv/netbackup/NET_BUFFER_SZ – Backup

/usr/openv/netbackup/NET_BUFFER_SZ_REST – Restore

Default Value – 32 KB

Max Value – 128 KB

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

How to update Device Configuration Files:

The files external_robotics.txt and external_types.txt are used by the NetBackup Enterprise Media Manager database to determine which protocols and settings to use to communicate with storage devices. They are also used by the Device Configuration Wizard to automatically configure new devices.

1. Download and extract the new mappings file package to a temporary directory:

tar -xvf Mappings_v1117.tar

This will create three files in the temporary location:

Readme.txt

external_types.txt

external_robotics.txt

2. Copy the external_types.txt file from the temporary location to /usr/openv/var/global on the Master Server or the EMM Server.

cp /temp_dir/external_types.txt /usr/openv/var/global/

(For NetBackup High Availability environments, copy the file to the shared disk.)

3. Copy the external_robotics.txt file from the temporary location to /usr/openv/var/global on the master server, EMM Server, each media server that controls a robot, and each media server from which robot inventories will be run.

cp /temp_dir/external_robotics.txt /usr/openv/var/global/

(For NetBackup High Availability environments, copy the file to the shared disk.)

4. Update the NetBackup Enterprise Media Manager database with the new device mappings version. This only needs to be done once and must be run from the Master Server or the EMM Server. Use the command format below that corresponds to the installed version of NetBackup:

NetBackup 6.5/7.0/7.1/7.5: /usr/openv/volmgr/bin/tpext -loadEMM

NetBackup 6.0: /usr/openv/volmgr/bin/tpext

5. For Media Servers running 6.0_MP4 and earlier, manually update each Media Server with the new device mappings. (On Media Servers running 7.5, 7.1, 7.0, 6.5 or 6.0_MP5 and later, this command is not needed since ltid will update the device mappings when it starts.) This command must be run on each 6.0_MP4 and earlier Media Server that has devices attached:

/usr/openv/volmgr/bin/tpext -get_dev_mappings

6. Restart Device Manager (ltid) on each Media Server.

7. Verify that the version that is now stored in the Enterprise Media Manager database is the same as what is in the file stored on the Media Server:

/usr/openv/volmgr/bin/tpext -get_dev_mappings_ver

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Adjusting NBDB Memory Settings (NetBackup Database Administration tool):

(Unix)

/usr/openv/db/bin/dbadm

(Windows)

<install path>\Veritas\Netbackup\bin\NbDbAdmin.exe

Example on Unix:

Select #2 “Database Space and Memory Management”

Selected Database: NBDB

Status: UP

Consistency: OK

Space Utilization: 5 %

Database Administration

-----------------------

1) Select/Restart Database and Change Password

2) Database Space and Memory Management

3) Transaction Log Management

4) Database Validation Check and Rebuild

5) Move Database

6) Unload Database

7) Backup and Restore Database

8) Refresh Database Status

h) Help

q) Quit

ENTER CHOICE: 2

Select #4 “Adjust Memory Settings”

Selected Database: NBDB

Database Space and Memory Management

------------------------------------

1) Report on Database Space

2) Database Reorganize

3) Add Free Space

4) Adjust Memory Settings

h) Help

q) Quit

ENTER CHOICE: 4

Select the appropriate setting based on site size:

(Setting) (Initial) (Minimum) (Maximum)

Current 25M 25M 500M

Small 25M 25M 500M

Medium 200M 200M 750M

Large 500M 500M 1G

Adjust Memory Settings

----------------------

1) Small Configuration

2) Medium Configuration

3) Large Configuration

4) Custom

h) Help

q) Quit

WARNING:

NetBackup must be restarted for settings to take effect.

If settings are too large, database server may not start.

ENTER CHOICE:

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Vault:

Vault uses the primary backup image as the source image for the duplication operation. However, Vault duplicates from a nonprimary copy on disk if one exists.

Note: Vault does not select the SLP-managed images that are not lifecycle complete

Vault process:

• About choosing backup images

• About duplicating backup images

• About backing up the NetBackup catalog

• About ejecting media

• About generating reports

NetBackup Vault interacts with the following NetBackup services and catalogs:

• Media Manager, which manages robots and media

• The NetBackup catalog and the Media Manager database record of the images that have been vaulted

• The Media Manager database information which determines when expired media can be returned to the robot for reuse

• The Activity Monitor which displays the status of the Vault job

Vault Configuration:

• Create offsite volume pools

• Create a Vault catalog backup schedule

• Configuring Vault Management Properties

- General tab

• The email address for session status notification.

• The email address for eject notification for all profiles.

• The sort order for ejected media.

- Alternate Media Server Names tab

- Retention Mappings tab

- Reports tab

• Configuring robots in Vault

• Creating a vault

• Creating retention mappings

• Creating profiles

Choose backups tab – Enables you to specify the criteria for selecting backup images.

Duplication tab – Enables you to configure duplication of the selected backup images.

Catalog Backup tab – Enables you to choose which catalog backup policy and schedule to use for creating a Vault catalog backup. For efficient disaster recovery, vault a new catalog backup each time you vault data.

Eject tab – Enables you to choose in which off-site volume pools Vault should look for the media you want to eject.

Reports tab – Enables you to choose which reports to generate.

• Vault sessions

- Scheduling a Vault session

- Creating a Vault policy

- Running a session manually

Session file – /usr/openv/netbackup/vault/sessions/vault_name/sidxxx

- "vltrun -preview" – starts a new vault job, searches the image catalog based on the criteria specified on the profile Choose Backups tab, writes the names of the images to a preview.list file, and then exits. Vault does not act on the images selected.

- "vlteject -preview" – previews the media to be ejected

Vault Commands:

vltrun

vltadm

vlteject

vltopmenu

vltinject

vltcontainers – adds ejected media to containers.

Vault Session files:

eject.list

preview.list

Vault Notify Scripts:

vlt_start_notify

vlt_ejectlist_notify

vlt_starteject_notify

vlt_endeject_notify

vlt_end_notify

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Service Levels:

In the world of data protection, the service level is based on recovery capability—there is no such thing as a “backup service level.” Two key concepts underpin all recovery service levels:

Recovery point objective (RPO)—The most recent state to which an application or server can be recovered in the event of a failure. The RPO is directly linked to the frequency of the protection process; if the application is protected by backups alone, then it means how often a backup is run.

Recovery time objective (RTO) – The time required to recover the application or server to the RPO from the moment that a problem is detected. Many factors influence the RTO, including the provisioning of hardware and the roll-forward time for application transaction logs, but one constant factor is the time needed to restore the data from the backup or snapshot that forms the RPO.

Data protection system – Tiers:

• Platinum service level—Uses snapshot backup technologies to take frequent backups of mission-critical applications such as order processing systems and transaction processing systems; typical RPO and RTO of one or two hours

• Gold service level—Uses frequent backups, perhaps every six hours or so, for important but non-critical applications such as e-mail, CRM, and HR systems; typical RPO and RTO of 12 hours or less

• Silver service level—daily backup, used to protect non-critical (such as user file and print data) and relatively static data. Typical RPO and RTO of one or two days.

• Fourth tier – sometimes added, with longer RPO and RTO, for data in, for example, test and development environments, where data is not critical to the business or can be easily recreated with relatively little time and effort.
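The tiers above can be read as a lookup: given how much data loss or downtime an application can tolerate, pick the loosest tier that still meets it. A rough sketch using the upper ends of the typical figures quoted above (2, 12, and 48 hours); the function name is hypothetical, the fourth tier is left out because no figure is given for it, and preferring the loosest tier assumes looser tiers cost less.

```python
# Upper end of the typical RPO/RTO figures quoted above, in hours.
TIERS = [("Platinum", 2), ("Gold", 12), ("Silver", 48)]

def loosest_tier_meeting(required_hours):
    """Return the least strict tier whose typical RPO/RTO fits the requirement."""
    for name, hours in reversed(TIERS):
        if hours <= required_hours:
            return name
    return None  # stricter than anything in the table

print(loosest_tier_meeting(24))  # prints: Gold
print(loosest_tier_meeting(1))   # prints: None
```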

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

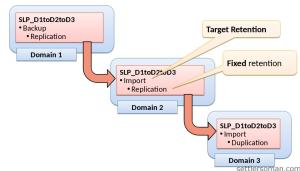

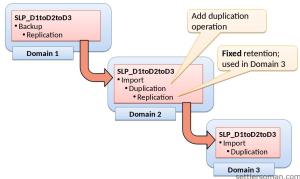

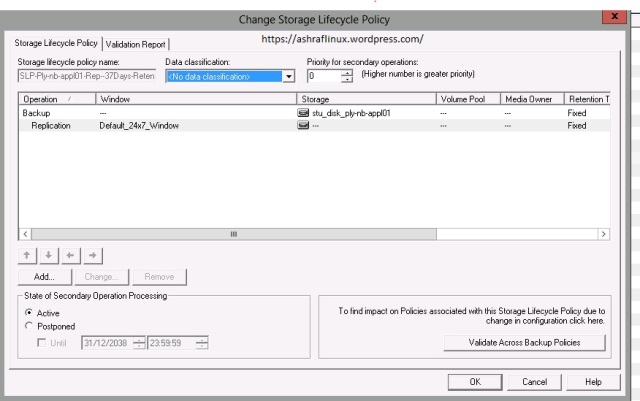

Storage Lifecycle Policy:

Storage Lifecycle Policy consists of two core components:

- a list of storage destinations where copies of the backup images will be stored

- the retention period for each copy

- A Storage Lifecycle Policy is a plan or map of where backup data will be stored and for how long. The Storage Lifecycle Policy determines where the backup is initially written to and where it is subsequently duplicated to. It also automates the duplication process and determines how long the backup data will reside in each location that it is duplicated to.

- A Storage Lifecycle Policy thus replaces both the duplication process and the staging process by introducing a series of storage locations or destinations that use different types of storage with different retention periods and by ensuring that data always exists at the appropriate locations at the appropriate phases of the lifecycle.

- By implementing a Storage Lifecycle Policy you remove the need for both Disk Staging Storage Units and duplication step in Vault profiles by defining all the locations where the data resides and for how long the data is retained in each location in a single policy definition.

- This is an acceptable trade-off, as the value of backup data decreases with time. Backup data is at its most valuable immediately after the backup has been made, and it is at that time that the RTO needs to be kept to a minimum. Once a more recent backup exists, the previous backup is of less value because it does not offer the best RPO.

- The Data Classification has absolutely no effect at the time of the execution of the backup job. Data Classifications only control the way in which images are expired to create space in Capacity Managed Storage Destinations. Images associated with a lower classification are always expired first.

- In Veritas NetBackup 6.5, Data Classifications are used by Storage Lifecycle Policies to apply a rank to backup images written to the same Capacity Managed Storage Units so that they can be retained for different periods, overriding the traditional “first in first out” model associated with Disk Staging Storage Units.

- Thus when the high water mark is met, the images will be removed—starting with the images with the lowest Data Classification that have passed the try-to-keep time and then working up the data classifications until either the low water mark is met or there are no more images that are past their try-to-keep time.
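The expiry order described above can be sketched in a few lines of Python. This is only an illustration of the rule, not NetBackup's implementation: the `Image` class, the numeric `classification_rank` (lower number means lower classification), and the gigabyte thresholds are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Image:
    backup_id: str
    size_gb: float
    classification_rank: int  # hypothetical: lower number = lower classification
    past_try_to_keep: bool    # True once the try-to-keep time has passed


def expire_for_space(images, used_gb, high_water_gb, low_water_gb):
    """Return (expired_backup_ids, used_gb_after_cleanup)."""
    expired = []
    # Nothing happens until the high water mark is met.
    if used_gb < high_water_gb:
        return expired, used_gb
    # Only images past their try-to-keep time are eligible,
    # and the lowest classification goes first.
    candidates = sorted(
        (image for image in images if image.past_try_to_keep),
        key=lambda image: image.classification_rank,
    )
    for image in candidates:
        if used_gb <= low_water_gb:
            break  # low water mark reached, stop expiring
        used_gb -= image.size_gb
        expired.append(image.backup_id)
    return expired, used_gb


images = [
    Image("gold_1", 30, 2, False),
    Image("silver_1", 30, 1, True),
    Image("bronze_1", 30, 0, True),
    Image("bronze_2", 20, 0, False),
]
expired, used = expire_for_space(images, used_gb=95, high_water_gb=90, low_water_gb=50)
print(expired, used)
```

In this run the bronze image that is past its try-to-keep time is removed first, then the silver one, and cleanup stops once usage falls below the low water mark. Images still inside their try-to-keep time are never touched.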

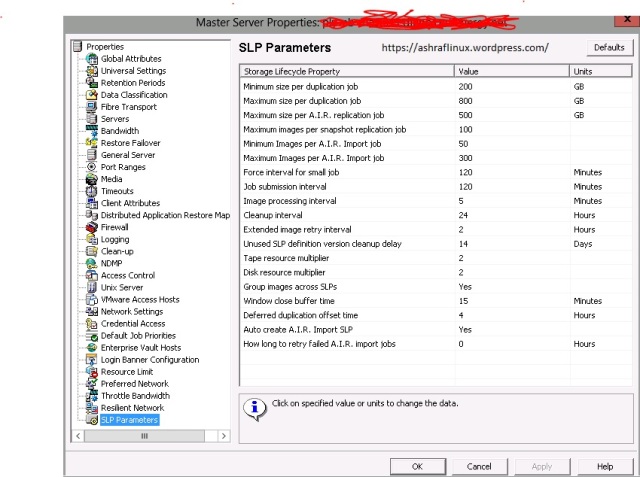

- The size and the frequency of duplication jobs requested by the Storage Lifecycle Policy can be specified in the LIFECYCLE_PARAMETERS file. Five parameters can be specified in this file.

The file is located at: /usr/openv/netbackup/db/config/LIFECYCLE_PARAMETERS

The five parameters are as follows:

• MIN_KB_SIZE_PER_DUPLICATION_JOB: The size of the minimum duplication batch (default 8 GB).

• MAX_KB_SIZE_PER_DUPLICATION_JOB: The size of the maximum duplication batch (default 25 GB).

• MAX_MINUTES_TIL_FORCE_SMALL_DUPLICATION_JOB: The time interval between forcing duplication sessions for small batches (default 30 minutes).

• IMAGE_EXTENDED_RETRY_PERIOD_IN_HOURS: After duplication of an image fails three times, this is the time interval between subsequent retries (default 2 hours).

• DUPLICATION_SESSION_INTERVAL_MINUTES: How often the Storage Lifecycle Policy service (nbstserv) checks whether it is time to start new duplication jobs (default 5 minutes).

If this file does not exist, the default values will be used.
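The fallback-to-defaults behaviour can be illustrated with a small Python sketch. It assumes the file holds one `NAME value` pair per line with sizes expressed in kilobytes, and it assumes the first parameter is spelled MIN_KB_SIZE_PER_DUPLICATION_JOB; both are assumptions for the example, so check the file format against your NetBackup version before relying on it.

```python
# Defaults from the list above, with the GB values converted to KB.
DEFAULTS = {
    "MIN_KB_SIZE_PER_DUPLICATION_JOB": 8 * 1024 * 1024,
    "MAX_KB_SIZE_PER_DUPLICATION_JOB": 25 * 1024 * 1024,
    "MAX_MINUTES_TIL_FORCE_SMALL_DUPLICATION_JOB": 30,
    "IMAGE_EXTENDED_RETRY_PERIOD_IN_HOURS": 2,
    "DUPLICATION_SESSION_INTERVAL_MINUTES": 5,
}


def parse_lifecycle_parameters(text):
    """Merge 'NAME value' lines (assumed format) over the defaults."""
    settings = dict(DEFAULTS)
    for line in text.splitlines():
        parts = line.split()
        # Ignore blank lines, unknown names, and non-numeric values.
        if len(parts) == 2 and parts[0] in DEFAULTS and parts[1].isdigit():
            settings[parts[0]] = int(parts[1])
    return settings


sample = "DUPLICATION_SESSION_INTERVAL_MINUTES 10\nUNKNOWN_SETTING 1\n"
settings = parse_lifecycle_parameters(sample)
print(settings["DUPLICATION_SESSION_INTERVAL_MINUTES"])
print(settings["MIN_KB_SIZE_PER_DUPLICATION_JOB"])
```

Passing an empty string models the case where the file does not exist: every value stays at its default.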

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

To Change path of tape device automatically – ENABLE_AUTO_PATH_CORRECTION

To reset the OpsCenter password – vssat resetpasswd --pdrtype ab --domain OpsCenterUsers --prplname admin

Check SAP agent version – /usr/openv/netbackup/dbext/SAP.hpia64.version

Add user account to access Java console – /usr/openv/java/auth.conf

Different Access Groups Which can be specified in auth.conf

AM – Activity Monitor

BPM – Backup Policy Management

JBP – Backup, Archive, and Restore

DM – Device Monitor

MM – Media Management

REP – Reports

SUM – Storage Unit Management

ALL – All

To Check whether disk comes from local or SAN

govan # bdf /usr/openv

Filesystem kbytes used avail %used Mounted on

/dev/vg01/lvol1 1228931072 830851403 373206297 69% /usr/openv

govan # vgdisplay -v vg01

--- Volume groups ---

VG Name /dev/vg01

VG Write Access read/write

VG Status available

Max LV 2047

Cur LV 2

Open LV 2

Max PV 2048

Cur PV 5

Act PV 5

Max PE per PV 278864

VGDA 10

PE Size (Mbytes) 16

Total PE 81330

Alloc PE 75264

Free PE 6066

Total PVG 0

Total Spare PVs 0

Total Spare PVs in use 0

VG Version 2.1

VG Max Size 4461824m

VG Max Extents 278864

--- Logical volumes ---

LV Name /dev/vg01/lvol1

LV Status available/syncd

LV Size (Mbytes) 1200128

Current LE 75008

Allocated PE 75008

Used PV 5

LV Name /dev/vg01/lvol3

LV Status available/syncd

LV Size (Mbytes) 4096

Current LE 256

Allocated PE 256

Used PV 1

govan # powermt display dev=all

Pseudo name=disk68

Symmetrix ID=000195700850

Logical device ID=092E

state=alive; policy=SymmOpt; priority=0; queued-IOs=0;

==============================================================================

--------------- Host --------------- - Stor - -- I/O Path -- -- Stats ---

### HW Path I/O Paths Interf. Mode State Q-IOs Errors

==============================================================================

4 0/2/1/0/4/0.0x50000973000d4954.0x4001000000000000 c31t0d1 FA 6fA active alive 0 0

6 0/5/1/0/4/0.0x50000973000d4968.0x4001000000000000 c33t0d1 FA 11fA active alive 0 1

SAP Manual Backup – su - orat08 -c "brbackup -u / -c -p /usr/openv/netbackup/ext/db_ext/sap/scripts/T08/initT08.sap.online"

Change Tape Drive type:

tpconfig -update -drive 7 -type hcart

To update NetBackup Inventory:

vmupdate -rt tld -rn 0 -rh glidden -empty_map -interactive

To mount a media:

tpreq -m <media id> -f /tmp/TAPE

To unmount a media:

tpunmount -f /tmp/TAPE

To update media type and barcode:

vmchange -new_mt <media_type> -m <media_id>

vmchange -barcode DD2107 -m DD2107 -rt TLD

If using the ALL_LOCAL_DRIVES directive when setting up the policy for the client behind the firewall, then the following ports will also need to be opened:

Master >> Client 13782 (bpcd)

Client >> Master 13724 (vnetd)

If the client needs to run user backups/restores, then the following port will also need to be opened:

Client >> Master

13720 (bprd)

If database backups are done from the client, then the following ports will also need to be opened:

Client >> Master

13720 (bprd)

13724 (vnetd)

Master >> Client

13782 (bpcd)

If using NetBackup enhanced authentication, you will also need to open:

Master >> Client

13783 (vopied)
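The port rules above can be collected into one lookup so that the firewall request for a given client is easy to assemble. The following Python sketch is only a convenience built from the list above; the scenario names and the `can_connect` helper are hypothetical, and a successful TCP connect only shows that the port is reachable, not that the NetBackup daemon behind it is healthy.

```python
import socket

# (direction, port, daemon) tuples taken from the list above.
REQUIRED_PORTS = {
    "all_local_drives": [
        ("master->client", 13782, "bpcd"),
        ("client->master", 13724, "vnetd"),
    ],
    "user_backups": [("client->master", 13720, "bprd")],
    "database_backups": [
        ("client->master", 13720, "bprd"),
        ("client->master", 13724, "vnetd"),
        ("master->client", 13782, "bpcd"),
    ],
    "enhanced_authentication": [("master->client", 13783, "vopied")],
}


def ports_to_open(scenarios):
    """Return the de-duplicated firewall rules for the chosen scenarios."""
    seen = set()
    rules = []
    for scenario in scenarios:
        for rule in REQUIRED_PORTS[scenario]:
            if rule not in seen:
                seen.add(rule)
                rules.append(rule)
    return rules


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


rules = ports_to_open(["all_local_drives", "database_backups"])
for direction, port, daemon in rules:
    print(direction, port, daemon)
```

Run `can_connect("client_name", 13782)` from the master server, or the reverse from the client, once the firewall change is in place; the host name here is a placeholder.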

To check the NDMP Tape drives connected to a NetApp Filer:

lhotse1> sysconfig -t

To check/kill the NDMP Sessions on a NetApp Filer:

system>ndmpd status [session]

system>ndmpd status all

system>ndmpd kill session_number

system>ndmpd kill all

To Add NDMP Host to NetBackup Configuration:

Windows: <install dir>\Veritas\Volmgr\bin\tpconfig -add -nh ndmp_hostname -user_id userID -password passwd

UNIX/Linux: /usr/openv/volmgr/bin/tpconfig -add -nh ndmp_hostname -user_id userID -password passwd

NDMP Backup/Restore Failure error codes:

NDMP_DEVICE_BUSY_ERR — The specified tape drive is already in use.

NDMP_DEVICE_OPENED_ERR — NDMP is attempting to open more connections than are allowed.

NDMP_NOT_AUTHORIZED_ERR — This error is returned if an NDMP request is issued before the connection has been authenticated.

NDMP_PERMISSIONS_ERR — The connection has been authenticated, but the credentials used lack the necessary permissions.

NDMP_DEV_NOT_OPEN_ERR — An attempt was made to access a device without first opening a connection to it.

NDMP_IO_ERR — The tape drive has returned an I/O error.

NDMP_TIMEOUT_ERR — The current operation has timed out.

NDMP_ILLEGAL_ARGS_ERR — The request contains an illegal argument.

NDMP_NO_TAPE_LOADED_ERR — There is no tape in the drive.

NDMP_WRITE_PROTECT_ERR — The tape in the drive is write protected.

NDMP_EOF_ERR — An unexpected end of file was encountered.

NDMP_EOM_ERR — The tape has run out of space (the End of Media Mark was encountered).

NDMP_FILE_NOT_FOUND_ERR — The requested file was not found.

NDMP_BAD_FILE_ERR — An error was caused by a bad file descriptor.

NDMP_NO_DEVICE_ERR — A request was made to a tape drive that does not exist.

NDMP_NO_BUS_ERR — The specified SCSI bus cannot be found.

NDMP_NOT_SUPPORTED_ERR — Either the NDMP protocol is not supported, or only a subset of the protocol is supported.

NDMP_XDR_DECODE_ERR — A message cannot be decoded.

NDMP_ILLEGAL_STATE_ERR — A request cannot be processed in its current state.

NDMP_UNDEFINED_ERR — A nonspecific error has occurred.

NDMP_XDR_ENCODE_ERR — There was an error encoding a reply message.

NDMP_NO_MEM_ERR — A memory allocation error.

NetBackup GUI reports and their command-line equivalents:

Backup Status Report

The Backup Status report shows status and error information on jobs completed within the specified time period. If an error has occurred, a short explanation of the error is included.

- cd /usr/openv/netbackup/bin/admincmd

- ./bperror -U -backstat -s info [-d <start_date> <start_time> -e <end_date> <end_time>]

Client Backups Report

The Client Backups report shows detailed information on backups completed within the specified time period.

- cd /usr/openv/netbackup/bin/admincmd

- ./bpimagelist -U [-A|-client name] [-d <start_date> <start_time> -e <end_date> <end_time>]

Problems Report

The Problems report lists the problems that the server has logged during the specified time period. This information is a subset of the information you get from the All Log Entries report.

- cd /usr/openv/netbackup/bin/admincmd

- ./bperror -U -problems [-d <start_date> <start_time> -e <end_date> <end_time>]

All Log Entries Report

The All Log Entries report lists all log entries for the specified time period. This report includes the information from the Problems report and Media Log Entries report. This report also shows the transfer rate, which is useful in determining and predicting rates and backup times for future backups (the transfer rate does not appear for multiplexed backups).

- cd /usr/openv/netbackup/bin/admincmd

- ./bperror -U -all [-d <start_date> <start_time> -e <end_date> <end_time>]

Media List Report

The Media Lists report shows information for volumes that have been allocated for backups. This report does not show media for Disk type storage units or for backups of the NetBackup catalogs.

- cd /usr/openv/netbackup/bin/admincmd

- ./bpmedialist -U -mlist [-m <media_id>]

Media Contents Report

The Media Contents report shows the contents of a volume as read directly from the media header and backup headers. This report lists the backup IDs (not each individual file) that are on a single volume. If a tape has to be mounted, there will be a longer delay before the report appears.

- cd /usr/openv/netbackup/bin/admincmd

- ./bpmedialist -U -mcontents [-m <media_id>]

Images on Media Report

The Images on Media report lists the contents of the media as recorded in the NetBackup image catalog. You can generate this report for any type of media (including disk) and filter it according to client, media ID, or path.

- cd /usr/openv/netbackup/bin/admincmd

- ./bpimmedia -U [-client <client_name>] [-mediaid <media_id>]

Media Log Entries Report

The Media Logs report shows media errors or informational messages that are recorded in the NetBackup error catalog. This information also appears in the All Log Entries report.

- cd /usr/openv/netbackup/bin/admincmd

- ./bperror -U -media [-d <start_date> <start_time> -e <end_date> <end_time>]

Media Summary Report

The Media Summary report summarizes active and non-active volumes for the specified server according to expiration date. It also shows how many volumes are at each retention level. In verbose mode, the report shows each media ID and its expiration date.

- cd /usr/openv/netbackup/bin/admincmd

- ./bpmedialist -summary

Media Written Report

The Media Written report identifies volumes that were used for backups within the specified time period.

- cd /usr/openv/netbackup/bin/admincmd

- ./bpimagelist -A -media [-d <start_date> <start_time> -e <end_date> <end_time>]

Volume detail (vmquery)

There is no NetBackup GUI equivalent for the Volume detail report. This is a command line method to get details for all tapes or a specific tape. This can be used as a complement to other Media reports.

- cd /usr/openv/volmgr/bin

- ./vmquery -a

or

- ./vmquery -m <media_id>
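When the bperror-based reports are run from a script, it helps to build the argument list in one place. The sketch below only assembles the command lines listed above; the report keys are hypothetical names, and the date string format used in the example (mm/dd/yyyy hh:mm:ss) is an assumption that depends on the server locale.

```python
ADMINCMD = "/usr/openv/netbackup/bin/admincmd"

# Flags exactly as listed in the report descriptions above.
REPORT_COMMANDS = {
    "backup_status": ["bperror", "-U", "-backstat", "-s", "info"],
    "problems": ["bperror", "-U", "-problems"],
    "all_log_entries": ["bperror", "-U", "-all"],
    "media_log_entries": ["bperror", "-U", "-media"],
}


def report_argv(report, start=None, end=None):
    """Build the argument list for one of the bperror reports."""
    command, *flags = REPORT_COMMANDS[report]
    argv = [f"{ADMINCMD}/{command}", *flags]
    if start:
        argv += ["-d", *start.split()]  # "<start_date> <start_time>"
    if end:
        argv += ["-e", *end.split()]    # "<end_date> <end_time>"
    return argv


argv = report_argv("problems", start="01/31/2013 00:00:00")
print(" ".join(argv))
```

The resulting list can be handed to `subprocess.run(argv, capture_output=True, text=True)` on a master server.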

To force media server read request to alternative host:

Add FORCE_RESTORE_MEDIA_SERVER to the /usr/openv/netbackup/bp.conf file in the following format:

FORCE_RESTORE_MEDIA_SERVER = from_host to_host

FORCE_RESTORE_MEDIA_SERVER = weber-bk jummy-bk

To check the HP-UX Tape Drive Issues:

galahad-bk # fcmsutil /dev/fcd5

Vendor ID is = 0x001077

Device ID is = 0x002422

PCI Sub-system Vendor ID is = 0x00103c

PCI Sub-system ID is = 0x0012df

PCI Mode = PCI-X 133 MHz

ISP Code version = 4.4.4

ISP Chip version = 3

Topology = PTTOPT_FABRIC

Link Speed = 4Gb

Local N_Port_id is = 0x540223

Previous N_Port_id is = 0x540223

N_Port Node World Wide Name = 0x50014380017af5f7

N_Port Port World Wide Name = 0x50014380017af5f6

Switch Port World Wide Name = 0x21e7000573b4b280

Switch Node World Wide Name = 0x2002000573b4b281

Driver state = ONLINE

Hardware Path is = 0/2/1/0/4/1

Maximum Frame Size = 2048

Driver-Firmware Dump Available = NO

Driver-Firmware Dump Timestamp = N/A

Driver Version = @(#) libfcd.a HP Fibre Channel ISP 23xx & 24xx Driver B.11.23.0909 /ux/core/isu/FCD/kern/src/common/wsio/fcd_init.c:Jun 5 2009,11:15:21

galahad-bk # ioscan -f | grep 'tape' | grep 'HP'

tape 46 0/1/1/0/4/0.84.2.255.4.4.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

tape 44 0/1/1/0/4/0.84.2.255.6.10.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

tape 26 0/1/2/0/4/0.228.0.255.4.5.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

tape 56 0/2/1/0/4/1.84.2.255.4.5.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

tape 58 0/2/1/0/4/1.84.2.255.6.6.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

tape 25 0/5/1/0/4/1.228.0.255.4.6.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

galahad-bk #

galahad-bk # ioscan -fnC tape | grep 'NO_HW'

glidden # ioscan -fnuC tape

Class I H/W Path Driver S/W State H/W Type Description

==================================================================

tape 12 0/1/1/0/4/1.220.1.255.2.9.0 stape CLAIMED DEVICE HP Ultrium 4-SCSI

/dev/rmt/12m /dev/rmt/12mn /dev/rmt/c19t9d0BEST /dev/rmt/c19t9d0BESTn

/dev/rmt/12mb /dev/rmt/12mnb /dev/rmt/c19t9d0BESTb /dev/rmt/c19t9d0BESTnb

tape 13 0/1/1/0/4/1.220.1.255.3.5.0 stape NO_HW DEVICE HP Ultrium 4-SCSI

/dev/rmt/13m /dev/rmt/13mn /dev/rmt/c20t5d0BEST /dev/rmt/c20t5d0BESTn

/dev/rmt/13mb /dev/rmt/13mnb /dev/rmt/c20t5d0BESTb /dev/rmt/c20t5d0BESTnb

tape 8 0/2/1/0/4/1.220.1.255.3.1.0 stape NO_HW DEVICE HP Ultrium 4-SCSI

/dev/rmt/8m /dev/rmt/8mn /dev/rmt/c16t1d0BEST /dev/rmt/c16t1d0BESTn

/dev/rmt/8mb /dev/rmt/8mnb /dev/rmt/c16t1d0BESTb /dev/rmt/c16t1d0BESTnb

tape 9 0/2/1/0/4/1.220.1.255.3.2.0 stape NO_HW DEVICE HP Ultrium 4-SCSI

/dev/rmt/9m /dev/rmt/9mn /dev/rmt/c16t2d0BEST /dev/rmt/c16t2d0BESTn

/dev/rmt/9mb /dev/rmt/9mnb /dev/rmt/c16t2d0BESTb /dev/rmt/c16t2d0BESTnb

tape 10 0/5/1/0/4/0.220.1.255.3.3.0 stape NO_HW DEVICE HP Ultrium 4-SCSI

/dev/rmt/10m /dev/rmt/10mn /dev/rmt/c18t3d0BEST /dev/rmt/c18t3d0BESTn

/dev/rmt/10mb /dev/rmt/10mnb /dev/rmt/c18t3d0BESTb /dev/rmt/c18t3d0BESTnb

tape 11 0/5/1/0/4/0.220.1.255.3.4.0 stape NO_HW DEVICE HP Ultrium 4-SCSI

/dev/rmt/11m /dev/rmt/11mn /dev/rmt/c18t4d0BEST /dev/rmt/c18t4d0BESTn

/dev/rmt/11mb /dev/rmt/11mnb /dev/rmt/c18t4d0BESTb /dev/rmt/c18t4d0BESTnb

glidden #

To check the Linux Tape Drive Issues:

dcs-lnx-nbu-med2 # lsmod |grep st

st 72933 14

ql2300_stub 372724 0

usb_storage 122529 0

scsi_mod 196953 9 emcp,scsi_dh,st,sg,usb_storage,qla2xxx,scsi_transport_fc,cciss,sd_mod

dcs-lnx-nbu-med2 # lsmod |grep sg

sg 70377 0

scsi_mod 196953 9 emcp,scsi_dh,st,sg,usb_storage,qla2xxx,scsi_transport_fc,cciss,sd_mod

dcs-lnx-nbu-med2 #

dcs-lnx-nbu-med2 # cat drive_TLD20_Drive112

MODE = 2

TIME = 1353318700

MASTER = glidden

SR_KEY = 0 1

PATH = /dev/nst18

REQID = -1350811059

ALOCID = 50198872

RBID = {B711FD22-322E-11E2-9F84-001E0BFDD20E}

PID = 11369

FILE = /usr/openv/netbackup/db/media/tpreq/drive_TLD20_Drive112

DONE

dcs-lnx-nbu-med2 # ls -ltr /usr/openv/netbackup/db/media/tpreq/drive_TLD20_Drive112

ls: /usr/openv/netbackup/db/media/tpreq/drive_TLD20_Drive112: No such file or directory

dcs-lnx-nbu-med2 # ls -ltr /dev/nst19

crw------- 1 root disk 9, 147 Oct 11 07:57 /dev/nst19

dcs-lnx-nbu-med2 # ls -ltr /dev/nst20

crw------- 1 root disk 9, 148 Oct 11 07:57 /dev/nst20

dcs-lnx-nbu-med2 # dmesg

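Special permission bits (SUID, SGID, and sticky bit) to let everyone run commands:

-r-sr-sr-t

Lowercase s or t – the special bit is set and execute is also set

Uppercase S or T – the special bit is set but execute is not

First s – SUID – 4

Second s – SGID – 2

t – sticky bit – 1

Total – 7 (the leading digit of the mode)

-r-sr-sr-t

r – 4

s or t (x) – 1

Total – 5 each for user, group, and other (555), so the full mode is 7555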

volt# ls -ltr /usr/openv/volmgr/bin/vmchange

-r-sr-sr-t 1 root bin 621368 Feb 3 2011 /usr/openv/volmgr/bin/vmchange

volt# ls -ltr /usr/openv/netbackup/bin/bpadm

-rwxr-xr-x 1 root bin 452928 Feb 3 2011 /usr/openv/netbackup/bin/bpadm

volt# chmod 7555 /usr/openv/netbackup/bin/bpadm

volt# ls -ltr /usr/openv/netbackup/bin/bpadm

-r-sr-sr-t 1 root bin 452928 Feb 3 2011 /usr/openv/netbackup/bin/bpadm

volt#
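To double-check how an octal mode maps to the permission string shown by ls, Python's standard `stat` module can do the conversion. This is a small sketch; `describe_mode` is a hypothetical helper name, and the mode is treated as belonging to a regular file.

```python
import stat


def describe_mode(octal_mode):
    """Translate an octal permission value into ls-style notation."""
    mode = stat.S_IFREG | octal_mode  # treat it as a regular file
    return {
        "string": stat.filemode(mode),
        "suid": bool(mode & stat.S_ISUID),    # 4000
        "sgid": bool(mode & stat.S_ISGID),    # 2000
        "sticky": bool(mode & stat.S_ISVTX),  # 1000
    }


print(describe_mode(0o7555)["string"])  # mode set by chmod 7555
print(describe_mode(0o755)["string"])   # mode of bpadm before the change
print(describe_mode(0o7444)["string"])  # special bits without execute
```

The third call shows the uppercase S and T case: the special bits are set, but because the execute bits are missing they have no practical effect.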

To Start TSM Client Services:

[glerpd42]# pwd

/etc

[glerpd42]# cat inittab |grep dsm

tsm1::once:/usr/bin/dsmc sched -server=gltsmd12 >/dev/null 2>&1 #TSM Scheduler.

[glerpd42]#

[glerpd42]# nohup /usr/bin/dsmc sched -server=gltsmd12 >/dev/null 2>&1 &

[1] 35651596

[glerpd42]#

Command to grep client name in Avamar

mccli client show --recursive=true | grep -i 'DCA-CX-86' | awk '{print $2}'

To Add NFS export on Linux Servers:

–> Edit /etc/exports and add a line as below

/usr/openv *(ro,sync)

* – means any host can mount this export

ro – read-only

rw – read and write

–> exportfs -a

This command exports all directories listed in /etc/exports (exportfs -r re-exports everything and removes exports that are no longer listed)

–> exportfs

With no options, this command lists the current exports

exportfs

/usr/openv <world>

–> To start NFS automatically at boot

chkconfig nfs on

To check memory usage in HP UX Server – Command:

swapinfo -tam

To stop the pbx_exchange daemon:

/opt/VRTSpbx/bin/vxpbx_exchanged stop

To start the pbx_exchange daemon:

/opt/VRTSpbx/bin/vxpbx_exchanged start

Cron file timing: – Crontab

* * * * * command to be executed

- - - - -

| | | | |

| | | | +----- day of week (0 - 6) (Sunday=0)

| | | +------- month (1 - 12)

| | +--------- day of month (1 - 31)

| +----------- hour (0 - 23)

+------------- min (0 - 59)
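As a quick check of the field order, the following Python sketch splits a crontab line into its five time fields and the command. The function name and the sample script path are hypothetical, and the sketch does not validate ranges or expand lists and steps.

```python
FIELDS = ["minute", "hour", "day_of_month", "month", "day_of_week"]


def split_crontab_line(line):
    """Split a crontab entry into its five time fields and the command."""
    parts = line.split(None, 5)  # the sixth part keeps the full command
    if len(parts) < 6:
        raise ValueError("expected five time fields followed by a command")
    entry = dict(zip(FIELDS, parts[:5]))
    entry["command"] = parts[5]
    return entry


# Hypothetical entry: run a script at 02:30 every Sunday.
entry = split_crontab_line("30 2 * * 0 /tmp/report.sh arg1")
print(entry)
```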

tar and gzip commands:

tar cvf Dlinklogs_INC1185457262.tar /tmp/Dlinklogs_INC1185457262/

gzip Dlinklogs_INC1185457262.tar

zcat file.tar.Z | tar xvf - – uncompress and extract a tar.Z file

gunzip -c NetBackup_7.0_Solaris_Sparc64_GA.tar.gz | tar xvf -

du -skh /usr/openv/netbackup/db/* – Command to get Folder/Directory Size

du -sk /usr/openv/netbackup/db/images/* > /tmp/catsize.out

##########################################

Sybase SQL Anywhere server management

Upon startup, the Sybase SQL Anywhere server uses the SQL Anywhere daemon to set the server parameters in the server.conf file. Then, the daemon starts the databases that are indicated in the databases.conf file.

¦ /usr/openv/db/bin/nbdbms_start_server – Starts the SQL Anywhere server if no option is specified.

¦ /usr/openv/db/bin/nbdbms_start_server -stop -f – Stops the server; -f forces a shutdown with active connections.

¦ /usr/openv/db/bin/nbdbms_start_server -stat – The -stat option tells whether the server is up or down:

SQL Anywhere Server Ping Utility Version 11.0.1.2044

Ping server successful.

##########################################

The NetBackup catalog resides on the NetBackup master server and consists of the following:

• The image database. The image database contains information about the data that has been backed up. It is the largest part of the catalog.

• NetBackup data that is stored in relational database files. The data includes media and volume data describing media usage and volume information, which is used during the backups.

• NetBackup configuration files. The configuration files (databases.conf and server.conf) are flat files that contain instructions for the SQL Anywhere daemon.

IMAGE DATABASE:

• Image database is located at /usr/openv/netbackup/db/images and contains the following files:

¦ Image files (files that store only backup set summary information)

- Each image file is an ASCII file, generally less than 1 kilobyte in size. An image file contains only backup set summary information. For example, the backup ID, the backup type, the expiration date, fragment information, and disaster recovery information.

¦ Image .f files (files that store the detailed information of each file backup)

- The binary catalog can contain one or more image .f files. This type of file is also referred to as a files-file. The image .f file may be large because it contains the detailed backup selection list for each file backup. Generally, image .f files range in size from 1 kilobyte to 10 gigabytes.

(*) Image .f file single file layout

NetBackup stores file information in a single image.f file if the information for the catalog is less than 4 megabytes.

(*) Image .f file multiple file layout

When the file information for one catalog backup is greater than 4 megabytes, the information is stored in multiple .f files: one main image .f file plus nine additional .f files. Separating the additional .f files from the image .f file and storing the files in the catstore directory improves performance while writing to the catalog.

RELATIONAL DATABASE:

NetBackup installs Sybase SQL Anywhere during the master server installation as a private, non-shared server for the NetBackup database. Also known as the Enterprise Media Manager (EMM) database, the NetBackup database (NBDB) contains information about volumes and the robots and drives that are in NetBackup storage units.

¦ Database files

¦ /usr/openv/db/data/BMRDB.db (if BMR is installed)

¦ /usr/openv/db/data/BMRDB.log (if BMR is installed)

¦ /usr/openv/db/data/BMR_DATA.db (if BMR is installed)

¦ /usr/openv/db/data/BMR_INDEX.db (if BMR is installed)

¦ /usr/openv/db/data/DARS_DATA.db

¦ /usr/openv/db/data/DARS_INDEX.db

¦ /usr/openv/db/data/DBM_DATA.db

¦ /usr/openv/db/data/DBM_INDEX.db

¦ /usr/openv/db/data/NBDB.db

¦ /usr/openv/db/data/EMM_DATA.db

¦ /usr/openv/db/data/EMM_INDEX.db

¦ /usr/openv/db/data/NBDB.log

CONFIGURATION FILES:

¦ /usr/openv/db/data/vxdbms.conf

¦ /usr/openv/var/global/server.conf

¦ /usr/openv/var/global/databases.conf

##########################################

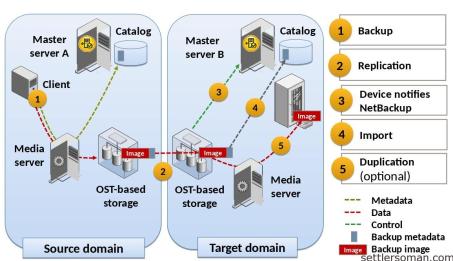

AIR – Auto Image Replication

Auto Image Replication creates off-site copies of mission-critical backups to protect against site loss.

Step 1 – The backup is written to disk storage in the source domain using a backup policy with an SLP configured for Auto Image Replication. When the backup completes, the catalog data it generates is appended to the end of the backup.

Step 2 – The backup is duplicated to the target domain across the WAN (or LAN).

Step 3 – The storage device in the target domain alerts the target master server to the fact that a backup has been duplicated to it. This triggers the receiving SLP to run a “fast import” operation in which the catalog data transferred from the source domain is added to the target domain’s catalog.

Step 4 – The receiving SLP in the target domain can now duplicate the received backup to any desired location for storage, such as creating a tape for long-term retention.

##########################################

RTP – RealTime Protection

RTP is used to create duplicate writes of data to two destinations, so it is a “real time” copy. AIR is used with a Storage Lifecycle Policy to replicate data that has been written to primary storage, and it works after the data is written, so there can be a lag time (backlog) of hours or even days depending on the environment and bandwidth.

So in the end, you get two copies of the data, but RTP is done immediately, while AIR is done when there are cycles in the infrastructure.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Designing your backup system:

The planning and configuration examples that follow are based on standard and ideal calculations. Your numbers can differ based on your particular environment, data, and compression rates.

After an analysis of your backup requirements, you can begin designing your backup system. The table below summarizes the steps to completing a design for your backup system.

Step 1 : Calculate the required data transfer rate for your backups. Calculate the rate of transfer your system must achieve to complete a backup of all your data in the time available.

Step 2 : Calculate how long it takes to back up to tape or disk. Determine what kind of tape or disk technology meets your needs.

Step 3 : Calculate the required number of tape drives. Determine how many tape drives are needed.

Step 4 : Calculate the required data transfer rate for your network(s). For backups over a network, you must move data from your client(s) to your media server(s) fast enough to finish backups within your backup window.

Step 5 : Calculate the size of your NetBackup image database. Determine how much disk space is needed to store your NetBackup image database.

Step 6 : Calculate the size of the NetBackup relational database (NBDB). Determine the space required for NBDB.

Step 7 : Calculate media needed for full and incremental backups. Determine how many tapes are needed to store and retrieve your backups.

Step 8 : Calculate the size of the tape library needed to store your backups. Determine how many robotic library tape slots are needed to store all your backups.

Step 9 : Design your master server. Use the previous calculations to design and configure a master server.

Step 10 : Estimate the number of master servers needed.

Step 11 : Estimate the number of media servers needed.

Step 12 : Design your OpsCenter server.

Step 13 : Review a summary of these steps.
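Steps 1 and 3 come down to simple arithmetic: the required rate is the amount of data divided by the backup window, and the drive count is that rate divided by what one drive can sustain, rounded up. A minimal sketch follows; the function names and the example figures (1000 GB, an 8 hour window, 50 GB per hour per drive) are made up for illustration and ignore compression, multiplexing, and network limits.

```python
import math


def required_rate_gb_per_hour(data_gb, window_hours):
    """Transfer rate needed to finish the backup inside the window."""
    return data_gb / window_hours


def drives_needed(data_gb, window_hours, drive_rate_gb_per_hour):
    """Number of drives needed, rounding up to whole drives."""
    rate = required_rate_gb_per_hour(data_gb, window_hours)
    return math.ceil(rate / drive_rate_gb_per_hour)


rate = required_rate_gb_per_hour(1000, 8)
drives = drives_needed(1000, 8, 50)
print(rate, drives)
```

With these example numbers the system has to move 125 GB per hour, which needs three drives because two and a half drives must be rounded up.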

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

TCP/IP Network Buffer Size:

/usr/openv/netbackup/NET_BUFFER_SZ is a file containing a number indicating the TCP/IP socket buffer size that should be used for data transfers between the NetBackup media server and its clients. If the file does not exist, the default value used is 32032 bytes

# echo "262144" > /usr/openv/netbackup/NET_BUFFER_SZ

# cat /usr/openv/netbackup/NET_BUFFER_SZ

262144

#

NET_BUFFER_SZ_REST – is for Network Restores

Data Buffer Size and Number of Data Buffers:

The NetBackup media server uses shared memory to buffer data between the network and the tape drive (or between the disk and the tape drive if the NetBackup media server and client are the same system). By default, NetBackup uses 8 x 32KB shared memory buffers for non-multiplexed backups and 4 x 64KB for a multiplexed backup.

These buffers can be configured by creating the files /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS and /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS on the NetBackup media server.

The SIZE_DATA_BUFFERS file should contain a single line specifying the data buffer size in bytes in decimal format.

The NUMBER_DATA_BUFFERS file should contain a single line specifying the number of data buffers in decimal format.

IMPORTANT: Because the data buffer size equals the tape I/O size, the value specified in SIZE_DATA_BUFFERS must not exceed the maximum tape I/O size supported by the tape drive or operating system. This is usually 256 KB or 128 KB.

# echo "262144" > /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS

# echo "16" > /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS

# cat /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS

262144

# cat /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS

16

#
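Before writing new values into these two files it is worth checking them against the tape I/O limit and seeing how much shared memory one buffer set will use. The sketch below is a rough illustration: the function name is hypothetical, the 256 KB ceiling is simply the "usually" figure quoted above, and the result is the size of a single buffer set (buffer size multiplied by buffer count), not the total for a whole media server.

```python
DEFAULT_NON_MULTIPLEXED = (8, 32 * 1024)  # 8 buffers of 32 KB
DEFAULT_MULTIPLEXED = (4, 64 * 1024)      # 4 buffers of 64 KB


def buffer_set_bytes(size_bytes, number, max_tape_io_bytes=256 * 1024):
    """Validate the buffer size and return the bytes used by one buffer set."""
    if size_bytes > max_tape_io_bytes:
        raise ValueError("SIZE_DATA_BUFFERS exceeds the maximum tape I/O size")
    if number < 1:
        raise ValueError("NUMBER_DATA_BUFFERS must be at least 1")
    return size_bytes * number


default_bytes = buffer_set_bytes(DEFAULT_NON_MULTIPLEXED[1], DEFAULT_NON_MULTIPLEXED[0])
tuned_bytes = buffer_set_bytes(262144, 16)
print(default_bytes, tuned_bytes)
```

With the values used in the example commands, one buffer set grows from 256 KB to 4 MB, which is why shared memory limits on the media server need to be reviewed alongside this change.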

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Generate test data for backups, duplications, verifies, restores, etc.

A need was identified to provide a means of generating test data to process through NetBackup.

- This data should be: Repeatable and controllable.

- As ‘light-weight’ as possible during generation.

- Indistinguishable from regular data, to allow for further processing, such as duplications, verifies, restores, etc.

- A set of file list directives are available to control the size, number, compressibility, and delivery rate of data through a Standard NetBackup policy.

- These directives can be used to create data in any profile that is desired, with little to no impact on the client machine.

- The client’s network will be impacted, just like a regular backup, unless the client is also the media server.

- The images that are created are standard images, and can be verified, imported, duplicated and restored.

- UNIX/Linux clients only, in a Standard NetBackup policy.

- Client encryption may be used, but not client compression.

- Since no actual data is being used for the backup, restores will not produce any files.

- This will generate real images, using up storage space, and should be dealt with accordingly, i.e. expired, removed, etc.

NEW_STREAM

GEN_DATA

GEN_KBSIZE=100

GEN_MAXFILES=100

GEN_PERCENT_RANDOM=50

NEW_STREAM

GEN_DATA

GEN_KBSIZE=100

GEN_MAXFILES=100

GEN_PERCENT_RANDOM=60

GEN_FILENAME_OFFSET=100

NEW_STREAM
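For policies with many streams, the directive list can be generated instead of typed by hand. The following Python sketch emits the same directives shown above. The function names are hypothetical, and the choice to step GEN_FILENAME_OFFSET by the number of files per stream is an assumption based on the second stream in the example (offset 100 after 100 files).

```python
def gen_data_stream(kb_size, max_files, percent_random, filename_offset=None):
    """Return the directives for a single generated-data stream."""
    lines = [
        "NEW_STREAM",
        "GEN_DATA",
        f"GEN_KBSIZE={kb_size}",
        f"GEN_MAXFILES={max_files}",
        f"GEN_PERCENT_RANDOM={percent_random}",
    ]
    if filename_offset is not None:
        lines.append(f"GEN_FILENAME_OFFSET={filename_offset}")
    return lines


def gen_data_file_list(stream_count, kb_size, max_files, percent_random):
    """Return the directives for several streams, offsetting later streams."""
    lines = []
    for index in range(stream_count):
        # Assumption: later streams get an offset so file names do not repeat.
        offset = index * max_files if index else None
        lines += gen_data_stream(kb_size, max_files, percent_random, offset)
    return lines


file_list = gen_data_file_list(2, kb_size=100, max_files=100, percent_random=50)
print("\n".join(file_list))
```

Paste the printed lines into the backup selections of a Standard policy for a UNIX/Linux client.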

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Adjusting Batch Size for sending MetaData to the Catalog:

• Can be used to tune problems with backing up file systems with many files and also file adds into catalog exceeding bpbrm timeout

• /usr/openv/netbackup/MAX_FILES_PER_ADD – affects all backups, default is 5,000

• /usr/openv/netbackup/FBU_MAX_FILES_PER_ADD – affects FlashBackup, default is 95,000

• /usr/openv/netbackup/CAT_BU_MAX_FILES_PER_ADD – affects catalog backups, default is the maximum allowed, 100,000

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

SAN Client Tuning:

• On FT media servers, using a NUMBER_DATA_BUFFERS above 16 may not yield performance improvements and may cause backup failures.

• Use NUMBER_DATA_BUFFERS_FT to set this value for just FT backups. The default is 16 for tape and 12 for disk.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Tuning nbrb for Resource Utilization:

• The NetBackup Resource Broker handles granting resources to backup, restore and duplications.

• nbrb.conf settings are moved into EMM in 7.1 and above, and nbrbutil -listSettings is used to view them.

• These settings should be reviewed after upgrading to 7.1, paying special attention to RESPECT_REQUEST_PRIORITY and DO_INTERMITTENT_UNLOADS.

• BREAK_EVAL_ON_DEMAND is a relatively new setting and should also be considered.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Tuning server.conf Part I

c – Indicates the initial memory that is reserved for caching database pages and other server information

ch – Indicates the maximum cache size, as a limit to automatic cache growth

cl – Indicates the minimum cache size, as a limit to automatic cache resizing.

govan # cat /usr/openv/var/global/server.conf

-n NB_govan-bk

-x tcpip(LocalOnly=YES;ServerPort=13785) -gp 4096 -gd DBA -gk DBA -gl DBA -ti 0 -c 500M -ch 1G -cl 500M -zl -os 1M -o /usr/openv/db//log/server.log

-ud

Recommended change:

govan # cat /usr/openv/var/global/server.conf

-n NB_govan-bk

-x tcpip(LocalOnly=YES;ServerPort=13785) -gp 4096 -gd DBA -gk DBA -gl DBA -ti 0 -gn 40 -c 1G -ch 4G -cl 1G -zl -os 1M -o /usr/openv/db//log/server.log

-ud
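A quick way to review the cache options is to pull them out of server.conf and confirm that the minimum, initial, and maximum sizes are in a sensible order. The sketch below is an illustration only: the function names are hypothetical, it understands only the K, M, and G suffixes seen in the examples above, and the ordering check (cl not above c, c not above ch) is a common-sense sanity rule rather than something NetBackup enforces in this form.

```python
import re

UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_size(text):
    """Convert a value such as 500M or 1G to bytes."""
    match = re.fullmatch(r"(\d+)([KMG])", text)
    if not match:
        raise ValueError(f"unsupported size: {text}")
    return int(match.group(1)) * UNITS[match.group(2)]


def cache_settings(conf_text):
    """Extract the -c, -ch, and -cl values from server.conf text."""
    tokens = conf_text.split()
    found = {}
    for flag, value in zip(tokens, tokens[1:]):
        if flag in ("-c", "-ch", "-cl"):
            found[flag[1:]] = parse_size(value)
    return found


def cache_order_ok(settings):
    """True when minimum <= initial <= maximum cache size."""
    return settings["cl"] <= settings["c"] <= settings["ch"]


conf = (
    "-n NB_govan-bk\n"
    "-x tcpip(LocalOnly=YES;ServerPort=13785) -gp 4096 -gd DBA -gk DBA -gl DBA "
    "-ti 0 -c 500M -ch 1G -cl 500M -zl -os 1M -o /usr/openv/db//log/server.log\n"
    "-ud\n"
)
settings = cache_settings(conf)
print(settings, cache_order_ok(settings))
```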

Tuning server.conf Part II

gn – Indicates the number of requests the database server can handle at one time. This parameter limits the number of threads upon startup.

Tuning server.conf Part III

m – truncates and commits the NBDB transaction log

- The transaction log in NBDB.log can grow quite large and eventually cause issues with NBU operations. This typically only happens if catalog backups are not performed for an extended period, but it can also happen if the system is very busy.

- To prevent this transaction log growth, a -m option can be added at the end of server.conf, after the -ud option on the last line. This automatically truncates and commits the transaction log each time a checkpoint is done, which happens many times a day.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Tuning emm.conf

• UNIX: /usr/openv/var/global/emm.conf is the configuration file used by nbemm the Enterprise Media Manager.

• With the default settings in emm.conf (or with the file not present) even a number of admins opening the Device Manager in the GUI or running commands can exceed the number of connections. The default for DB browse connections is only 3 and DB connections is 4!

• For large environments the following settings are recommended as a minimum

NUM_DB_BROWSE_CONNECTIONS=20

NUM_DB_CONNECTIONS=21

NUM_ORB_THREADS=31

• This makes it important that the EMM database in /usr/openv/db/data is on really fast disk, and it is often advisable to have it on a separate disk from the image catalog and any logging.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Disk Layout Considerations

• Setting up separate file systems/disk spindles for the following components will improve the performance on large masters.

1. Unified logs

2. Catalog flat file components (in particular the image database)

3. Catalog relational database data files

4. Catalog relational database index files

5. Catalog relational database transaction logs

• Consider SSD for relational databases which are relatively small

• Put databases and log files on a Raid protected file system with the right balance of performance and redundancy.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Linux Media Server Fine tuning:

• Increase the number of file descriptors to at least 8192; 65536 is recommended with 7.5. Use ulimit -a to determine the current limit. The limit can be raised in /etc/security/limits.conf:

- hard nofile 65536 (can be tuned to unlimited as well)

- soft nofile 65536

• Increase the amount of shared memory available for NBU, especially on media servers by editing /etc/sysctl.conf and adding or modifying

kernel.shmmax= half or more of physical RAM.

• The following minimums are also required for other kernel parameters. Customers with busy master/media servers often end up with higher values.

Message Queues       Semaphores
msgmax = 65536       semmsl = 300
msgmnb = 65536       semmns = 1024
msgmni = 16384       semopm = 32
                     semmni = 1024
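
The Linux checks above can be sketched as follows. This is a minimal sketch, assuming a Linux host where os.sysconf exposes SC_PAGE_SIZE and SC_PHYS_PAGES; the function names are hypothetical, and the current kernel values must be supplied by the caller (for example, read from sysctl output).

```python
import os
import resource

# Minimums taken from the notes above; busy servers often need more.
KERNEL_MINIMUMS = {
    "msgmax": 65536, "msgmnb": 65536, "msgmni": 16384,
    "semmsl": 300, "semmns": 1024, "semopm": 32, "semmni": 1024,
}
RECOMMENDED_NOFILE = 65536


def recommended_shmmax(physical_ram_bytes: int) -> int:
    """Half of physical RAM, the lower bound suggested for kernel.shmmax."""
    return physical_ram_bytes // 2


def too_low(current: dict) -> dict:
    """Return {name: (current, minimum)} for kernel values under the minimum."""
    return {
        name: (current.get(name, 0), minimum)
        for name, minimum in KERNEL_MINIMUMS.items()
        if current.get(name, 0) < minimum
    }


def nofile_ok(soft_limit: int) -> bool:
    """True when the soft open-file limit meets the recommended value."""
    # RLIM_INFINITY means "no limit", so it always satisfies the recommendation.
    return soft_limit == resource.RLIM_INFINITY or soft_limit >= RECOMMENDED_NOFILE


if __name__ == "__main__":
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    print("suggested kernel.shmmax >=", recommended_shmmax(ram))
    print("nofile soft/hard:", soft, hard, "ok" if nofile_ok(soft) else "raise it")
```

The limit reported by getrlimit is the one in effect for the current process, so run the check as the user that starts the NetBackup daemons.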

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Bare Metal Restore – How to

1. BMR master setup – creates BMRDB – “bmrsetupmaster”

2. Configure Backup policy with BMR option and complete the backup

3. Configure Boot Server – can be configured on any client machine.

“bmrsetupboot -register”

4. Create SRT – The shared resource tree is the minimal bootable environment needed. It is basically a Windows PE OS. Only one is needed per bit version (32-bit or 64-bit).

- WIM is the image format; a bootable image gets created.

- You also add the NBU package so that it is built into the WIM image.

- You can also add maintenance pack details (7.1.0.1) through Modify SRT.

5. Booting the SRT – using PXE boot or CD boot

To configure PXE, use the PXE configuration wizard.

6. If you do a DSR (Dissimilar System Restore), you need to run the discover step to collect the hardware details of the destination server.

- Create an editable copy of the current configuration.

- Change the destination IP.

- Start “Prepare to Discover” and give the discovery a name.

- Boot the destination host with PXE boot or the CD image. The destination client will start sending its configuration details to the BMR server.

- Once this is done, the configuration of the destination server appears under the discovered configurations.

- Now change the discovered configuration: initialize the device drivers, network interfaces, and volumes. This removes the old configuration of the old server and adds only what is needed for the destination server. Change the disk configuration as needed.

7. Start “Prepare to restore state”

reboot the destination server for restore

establish restore environment

partition disks

format disks

restore files

finalize restore

8. After the restore completes and the server reboots

cleanup process

install NIC driver

detecting and completing DSR

remove temp files

update system state

check for external procedure

finalize restore

manually import foreign disks

Processes involved in BMR

bmrd – Bare Metal Restore master

bmrbd – BMR boot server

bmrsavecfg – The Bare Metal Restore agent that runs on client systems, collects the client configuration, and saves it to the master server.

bmrc – The Bare Metal Restore utility that clients use to communicate with the BMR master server during a restore. Runs on the restoring client.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

The NOexpire touch file is not intended for long-term use. Use it only for a short period, such as a maintenance window. There is no published Symantec documentation that recommends keeping this touch file in place for extended periods. The best way to prevent images from expiring is to use the bpexpdate command to extend the expiration date before the images expire.

The NOexpire touch file only prevents automatic/internal NetBackup image and tape cleanup from occurring. It does not prevent users or scripts from manually expiring images and tapes. Images and tapes will still expire if bpexpdate is manually run or run via a script.

How the touch file works:

The touch file prevents bpsched or nbpem from executing bpexpdate -deassignempty and from executing bptm -delete_expired and from executing bpimage -cleanup.

By default, bpimage -cleanup is run every 12 hours and at the end of an nbpem session. The Image cleanup interval can be tailored in the Clean-up section of the Master server’s Host Properties. bptm -delete_expired is used to clean the media db every ten minutes (or bpsched wake-up interval or Policy Update interval). It deassigns all tapes containing expired images. It does not clean up tapes with rogue fragments such as those from a failed backup. The bpexpdate -deassignempty will clean all expired and invalid fragments and deassign tapes as necessary.

To create the touch file:

On UNIX:

- touch /usr/openv/netbackup/bin/NOexpire on the master server.

On Windows:

- Create the file <install path>\Netbackup\bin\NOexpire on the master server.

- Ensure that the file on Windows does not have a file extension.
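
Because the touch file is meant to be temporary, a small check that reports how long it has been in place can help. This is a minimal sketch for a UNIX master server; the 8-hour threshold (MAX_HOURS) and the function names are hypothetical, not NetBackup values.

```python
import os
import time
from typing import Optional

UNIX_NOEXPIRE = "/usr/openv/netbackup/bin/NOexpire"  # path from the notes above
MAX_HOURS = 8.0  # hypothetical maintenance-window length, not a NetBackup value


def noexpire_age_hours(path: str = UNIX_NOEXPIRE,
                       now: Optional[float] = None) -> Optional[float]:
    """Return the age of the touch file in hours, or None if it is absent."""
    try:
        mtime = os.stat(path).st_mtime
    except (FileNotFoundError, NotADirectoryError):
        return None
    current = time.time() if now is None else now
    return max(0.0, (current - mtime) / 3600.0)


def describe(age_hours: Optional[float], max_hours: float = MAX_HOURS) -> str:
    """Turn the age into a short status message for a log or cron mail."""
    if age_hours is None:
        return "NOexpire absent: automatic image cleanup is active"
    if age_hours > max_hours:
        return (f"NOexpire in place for {age_hours:.1f} h: "
                "remove it or extend images with bpexpdate")
    return f"NOexpire in place for {age_hours:.1f} h"


print(describe(noexpire_age_hours()))
```

The age is based on the file's modification time, so touching the file again resets the clock.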

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

bp.conf options for UNIX clients

1. The main bp.conf file is located in the following location:

/usr/openv/netbackup/bp.conf

NetBackup uses internal software defaults for all options in the bp.conf file, except SERVER. During installation, NetBackup sets the SERVER option to the name of the master server where the software is installed.

See SERVER bp.conf entry for UNIX servers.

If a UNIX system is both a client and a server, both the server and the client options are in the /usr/openv/netbackup/bp.conf file.

Note:

The SERVER option must be in the /usr/openv/netbackup/bp.conf file on all NetBackup UNIX clients. It is also the only required entry in this file.

2. Each nonroot user on a UNIX client can have a personal bp.conf file in their home directory as follows:

$HOME/bp.conf

The options in personal bp.conf files apply only to user operations. During a user operation, NetBackup checks the $HOME/bp.conf file before /usr/openv/netbackup/bp.conf.

Root users do not have personal bp.conf files. NetBackup uses the /usr/openv/netbackup/bp.conf file for root users.

Note:

To change these options on non-UNIX clients, use either the client user interface or a configuration file, depending on the client. For instructions, see the online Help in the Backup, Archive, and Restore client interface.
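
The lookup order for UNIX clients described above can be illustrated with a short sketch. It assumes bp.conf entries have the form OPTION = value and that the first file containing an option wins; the notes only state that $HOME/bp.conf is checked first, so the exact merge behavior is an assumption. Function names and host names are hypothetical.

```python
from typing import Optional


def parse_bp_conf(text: str) -> dict:
    """Parse 'OPTION = value' lines, keeping every value of a repeated option."""
    options: dict = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, _, value = line.partition("=")
        options.setdefault(name.strip(), []).append(value.strip())
    return options


def lookup(option: str, main_text: str,
           personal_text: Optional[str] = None, is_root: bool = False) -> list:
    """Return the values for an option, checking the personal file first.

    Root users have no personal bp.conf, so only the main file is used.
    """
    if not is_root and personal_text is not None:
        personal = parse_bp_conf(personal_text)
        if option in personal:
            return personal[option]
    return parse_bp_conf(main_text).get(option, [])


# Hypothetical file contents.
main = "SERVER = master01\nCLIENT_NAME = client01\n"
personal = "CLIENT_NAME = alias01\n"
print(lookup("CLIENT_NAME", main, personal))
print(lookup("CLIENT_NAME", main, personal, is_root=True))
```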

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Oracle – RMAN Configuration

1. Install client software on the Oracle DB Server

2. API Library Linking

- Automatic Linking – /usr/openv/netbackup/bin/oracle_link

- Manual Linking:

ln -s /usr/openv/netbackup/bin/libobk.so64.1 /usr/openv/netbackup/bin/libobk.so

su - oracle

cd $ORACLE_HOME/lib(64)

ln -s /usr/openv/netbackup/bin/libobk.so libobk.so

- Oracle should be shut down before the link is made, or restarted after the link is created

3. Create RMAN Script

4. Configure Oracle Type Policy on NBU end and configure the Backup selection as that RMAN Script
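
After the linking step, it can be useful to confirm that libobk.so in the Oracle library directory really points at the NetBackup library. This is a minimal sketch, assuming the link was created with the absolute target shown in the manual linking commands; the function name is hypothetical, and a relative link would be reported as a mismatch.

```python
import os

NBU_LIBOBK = "/usr/openv/netbackup/bin/libobk.so"  # link target from the notes above


def libobk_points_to(oracle_lib_dir: str, expected: str = NBU_LIBOBK) -> bool:
    """True when <oracle_lib_dir>/libobk.so is a symlink whose target is `expected`."""
    link = os.path.join(oracle_lib_dir, "libobk.so")
    if not os.path.islink(link):
        return False  # Missing, or a regular file rather than a link.
    return os.readlink(link) == expected


if __name__ == "__main__":
    oracle_home = os.environ.get("ORACLE_HOME")
    if oracle_home:
        # Check both candidate directories, since the notes mention lib and lib64.
        for sub in ("lib", "lib64"):
            path = os.path.join(oracle_home, sub)
            print(path, "->", "linked" if libobk_points_to(path) else "not linked")
```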

Oracle – RMAN Troubleshooting

- DB Side Backup Logs

- “cd /XXXXX/dba/xxxxxx/backups/<DATABASE-SID-NAME>/log”

- NBU Side Backup Logs

- bpdbsbora, bporaexp, bporaimp, dbclient, bphdb

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Enabling automatic path correction

You can configure NetBackup to perform automatic device path correction. To do so, use the following procedure.

To configure automatic path correction

Use a text editor to open the following file:

install_path\VERITAS\Volmgr\vm.conf

Add the following AUTO_PATH_CORRECTION entry to the file:

AUTO_PATH_CORRECTION = YES

If it already exists but is set to NO, change the value to YES.

Save the file and exit the text editor.

- Adding either ENABLE_AUTO_PATH_CORRECTION or AUTO_PATH_CORRECTION = YES to the vm.conf file accomplishes the same thing. There is no difference between these two vm.conf settings. See one of the above mentioned guides (links provided in the Related Documents section below) for more information on the settings.
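
The vm.conf edit can be sketched as a text transformation. This is a minimal sketch, assuming one entry per line and that a line beginning with ENABLE_AUTO_PATH_CORRECTION already enables the feature, as the note above suggests; the function name is hypothetical.

```python
import re

# Matches "AUTO_PATH_CORRECTION = <value>" but not the ENABLE_ form.
_ENTRY = re.compile(r"^\s*AUTO_PATH_CORRECTION\s*=\s*(\S+)\s*$")


def enable_auto_path_correction(conf_text: str) -> str:
    """Return vm.conf text with automatic path correction switched on."""
    lines = conf_text.splitlines()
    found = False
    for i, line in enumerate(lines):
        if _ENTRY.match(line):
            # Existing entry: force the value to YES (covers the NO case).
            lines[i] = "AUTO_PATH_CORRECTION = YES"
            found = True
        elif line.strip().startswith("ENABLE_AUTO_PATH_CORRECTION"):
            found = True  # Equivalent setting is already present.
    if not found:
        lines.append("AUTO_PATH_CORRECTION = YES")
    return "\n".join(lines) + "\n"


# Hypothetical file content for illustration.
print(enable_auto_path_correction("AUTO_PATH_CORRECTION = NO\n"))
```

Other entries in the file are passed through unchanged.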

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

SAN Media Server

Designed for customers who would prefer to use their storage area network (SAN) for backup operations instead of their local area network (LAN). This feature enables LAN-free data protection with high-performance access to shared resources.

A SAN Media Server is directly connected to the SAN and is used to back up data directly to disk or tape. A SAN Media Server cannot back up or manage clients. It can only back up what is directly attached or mounted to it.

SAN Client

Offloads backup traffic from the LAN and allows for fast backups over the SAN at approximately 150 MB/sec. The SAN client can send data to a variety of NetBackup disk options and allows you to back up and restore to disk over the SAN. Data is sent to media servers via SCSI commands over the SAN rather than TCP/IP over the LAN to optimize performance.

SAN Clients send their data to a Fibre Transport (FT) Media Server.

To implement the SAN Client, the user needs to deploy a Fibre Channel based SAN between the client and the Media Server. The Server and Clients can each have multiple SAN ports zoned together. In the current release, the feature also requires disk-based storage, preferably Flexible Disk or OpenStorage, for the backend.

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Tuning can be done in the emm.conf file – /usr/openv/var/global/emm.conf

Beginning in NetBackup 6.0 Maintenance Pack 3 (MP3), nbemm will check for disk full conditions and call nbdb_admin -stop if this condition is encountered. This helps to prevent potential corruption in the Enterprise Media Manager (EMM) database due to the disk filling up.